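```python
history = {}
x = state.x
for step in range(20):
    log.info(f'TRAIN STEP: {step}')
    # Run one training step on the current configuration `x` at fixed `state.beta`
    x, metrics = ptExpSU3.trainer.train_step((x, state.beta))
    # Print the full metrics dict every other step (steps 2, 4, ..., 18)
    if step > 0 and step % 2 == 0:
        print_dict(metrics, grab=True)
    # Append each metric to its per-key list in `history`; step 0 is not recorded
    if step > 0:
        for key, val in metrics.items():
            history.setdefault(key, []).append(val)
```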
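The bookkeeping in this loop can be checked on its own: every step after step 0 is recorded in `history`, and the metrics are printed on every second step. The sketch below replaces `ptExpSU3.trainer.train_step` with a hypothetical stand-in, `fake_train_step`, that returns placeholder scalar metrics (not real training output). It uses `collections.defaultdict(list)` to build the same dict of per-step lists.

```python
from collections import defaultdict


def fake_train_step(inputs):
    """Hypothetical stand-in for ptExpSU3.trainer.train_step.

    Takes an `(x, beta)` tuple and returns an updated `x` plus a dict of
    placeholder scalar metrics.
    """
    x, beta = inputs
    x_new = x + 1
    metrics = {'loss': -0.01 * x_new, 'beta': beta}
    return x_new, metrics


def run(nsteps=20, print_every=2, beta=6.0):
    history = defaultdict(list)  # metric name -> list of per-step values
    printed = []                 # steps at which metrics would be printed
    x = 0
    for step in range(nsteps):
        x, metrics = fake_train_step((x, beta))
        if step > 0 and step % print_every == 0:
            printed.append(step)
        if step > 0:  # record every step after step 0
            for key, val in metrics.items():
                history[key].append(val)
    return dict(history), printed


history, printed = run()
print(len(history['loss']))  # 19: step 0 is never recorded
print(printed)               # [2, 4, 6, 8, 10, 12, 14, 16, 18]
```

With 20 steps this records 19 entries per metric and prints at the even steps 2 through 18, which matches the `TRAIN STEP` entries that are followed by a metrics dump in the output below.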

[10/04/23 08:07:05][INFO][2642635469.py:4] - TRAIN STEP: 0

[10/04/23 08:07:05][INFO][2642635469.py:4] - TRAIN STEP: 1

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 2

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-20.11355156 -13.83759796 -4.51987377 -11.45297864]

[-19.63407248 -12.82094906 -3.89140218 -11.38944608]

[-18.91054695 -12.57901193 -3.60837652 -10.80563673]]

logprob: torch.Size([3, 4]) torch.float64

[[-20.11355156 -13.83759796 -4.51987377 -11.45297864]

[-19.5349711 -12.66848527 -3.6937792 -11.31223158]

[-18.98136385 -12.5501776 -3.58081924 -10.80513544]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.09910138 -0.15246379 -0.19762298 -0.0772145 ]

[ 0.07081689 -0.02883433 -0.02755728 -0.00050128]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.09910138 -0.15246379 -0.19762298 -0.0772145 ]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[0. 0. 0. 0. ]

[0. 0. 0. 0. ]

[0.16991827 0.12362946 0.17006571 0.07671321]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.09910138 -0.15246379 -0.19762298 -0.0772145 ]

[ 0.07081689 -0.02883433 -0.02755728 -0.00050128]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.32232732 0.2759818 0.39099734 0.52317294]

sumlogdet: torch.Size([4]) torch.float64

[ 0.07081689 -0. -0.02755728 -0.00050128]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[1. 0. 1. 1.]

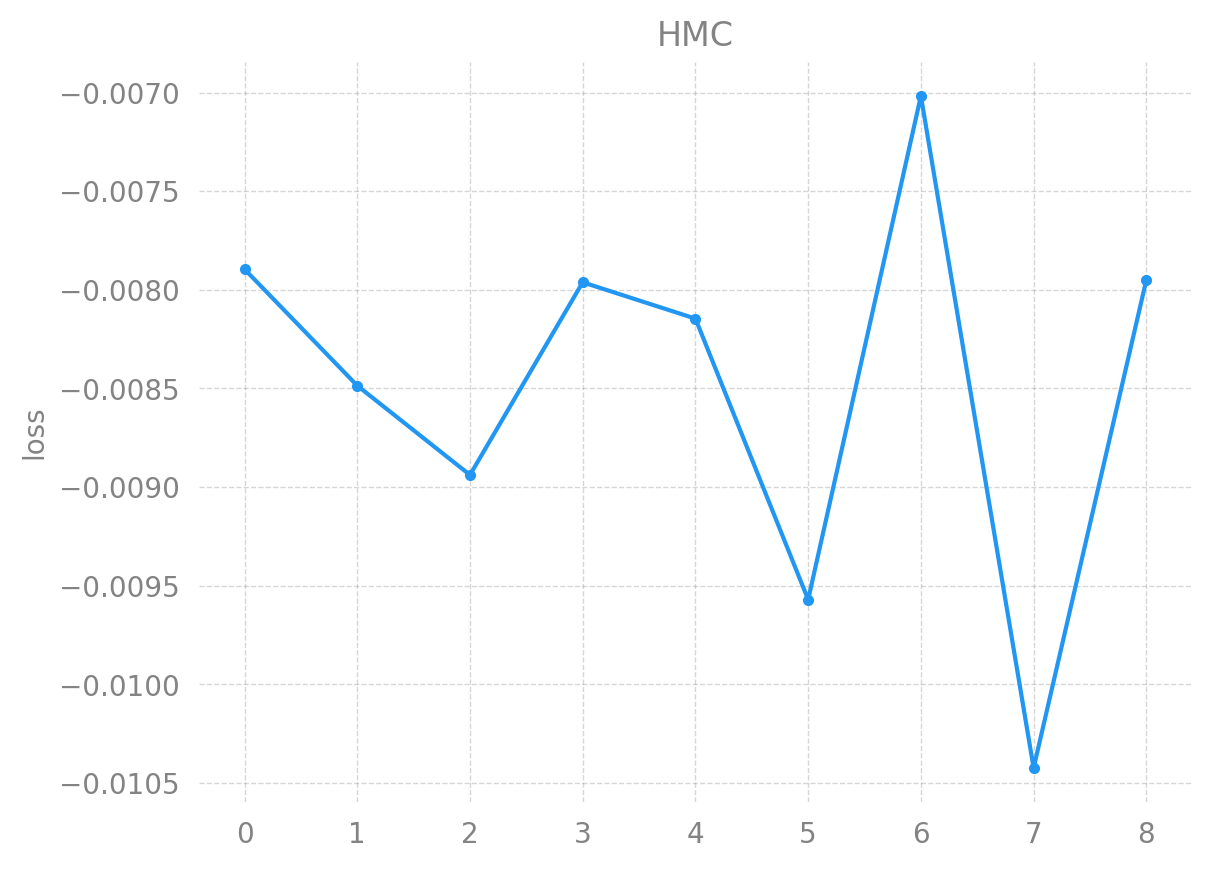

loss: None None

-0.009001684302756496

plaqs: torch.Size([4]) torch.float64

[0.36183972 0.43746352 0.02919545 0.2244051 ]

sinQ: torch.Size([4]) torch.float64

[ 0.01845919 -0.00937014 0.00653818 0.03579483]

intQ: torch.Size([4]) torch.float64

[ 0.00105205 -0.00053403 0.00037263 0.00204006]

dQint: torch.Size([4]) torch.float64

[0.00068181 0. 0.00033711 0.001986 ]

dQsin: torch.Size([4]) torch.float64

[0.01196306 0. 0.00591496 0.0348463 ]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 3

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 4

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-16.99217625 -18.05099071 -10.04985909 -7.1340411 ]

[-16.61047626 -17.84035555 -9.68866118 -7.14912011]

[-16.28336399 -18.36191548 -9.14330041 -5.86613539]]

logprob: torch.Size([3, 4]) torch.float64

[[-16.99217625 -18.05099071 -10.04985909 -7.1340411 ]

[-16.4708106 -17.6654387 -9.64363079 -7.10061592]

[-16.17915148 -18.35588077 -9.12314899 -5.81814653]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.13966566 -0.17491685 -0.04503039 -0.04850419]

[-0.10421251 -0.0060347 -0.02015142 -0.04798886]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.13966566 -0.17491685 -0.04503039 -0.04850419]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[0. 0. 0. 0. ]

[0. 0. 0. 0. ]

[0.03545315 0.16888215 0.02487897 0.00051533]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.13966566 -0.17491685 -0.04503039 -0.04850419]

[-0.10421251 -0.0060347 -0.02015142 -0.04798886]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.44351451 1. 0.39585389 0.26823426]

sumlogdet: torch.Size([4]) torch.float64

[-0.10421251 -0.0060347 -0. -0. ]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[1. 1. 0. 0.]

loss: None None

-0.014134476436103167

plaqs: torch.Size([4]) torch.float64

[0.46996628 0.45118214 0.18011337 0.30434348]

sinQ: torch.Size([4]) torch.float64

[ 0.03170589 -0.01147666 0.05118318 -0.00268528]

intQ: torch.Size([4]) torch.float64

[ 0.00180702 -0.00065409 0.00291709 -0.00015304]

dQint: torch.Size([4]) torch.float64

[0.00065855 0.00207145 0. 0. ]

dQsin: torch.Size([4]) torch.float64

[0.01155495 0.03634551 0. 0. ]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 5

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 6

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-22.17358114 -18.47427308 -2.95308721 -11.62559496]

[-21.83570903 -18.22818944 -2.4429929 -11.20037746]

[-21.81748065 -18.37604145 -2.70650275 -10.07465326]]

logprob: torch.Size([3, 4]) torch.float64

[[-22.17358114 -18.47427308 -2.95308721 -11.62559496]

[-21.84417608 -18.06424191 -2.60692839 -11.12021881]

[-21.89172921 -18.39051785 -2.62673381 -10.08631078]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.00846706 -0.16394753 0.16393549 -0.08015865]

[ 0.07424856 0.0144764 -0.07976894 0.01165751]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.00846706 -0.16394753 0.16393549 -0.08015865]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0. 0. 0. 0. ]

[ 0.0657815 0.17842393 -0.24370443 0.09181616]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.00846706 -0.16394753 0.16393549 -0.08015865]

[ 0.07424856 0.0144764 -0.07976894 0.01165751]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.75438538 0.91965634 0.72155015 0.21453462]

sumlogdet: torch.Size([4]) torch.float64

[ 0.07424856 0.0144764 -0.07976894 0.01165751]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[1. 1. 1. 1.]

loss: None None

-0.019461443124629982

plaqs: torch.Size([4]) torch.float64

[0.43455592 0.53410535 0.15486684 0.30434348]

sinQ: torch.Size([4]) torch.float64

[-0.01746029 0.01216864 0.01077427 -0.00268528]

intQ: torch.Size([4]) torch.float64

[-0.00099512 0.00069353 0.00061406 -0.00015304]

dQint: torch.Size([4]) torch.float64

[0.0016496 0.00074394 0.00087095 0.0014133 ]

dQsin: torch.Size([4]) torch.float64

[0.02894377 0.01305311 0.01528163 0.02479769]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 7

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 8

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-28.94977982 -23.93414904 -5.02251262 -20.14381224]

[-28.26856486 -24.03288661 -4.19892196 -20.37397293]

[-27.78459946 -23.56989642 -3.56347647 -20.06837893]]

logprob: torch.Size([3, 4]) torch.float64

[[-28.94977982 -23.93414904 -5.02251262 -20.14381224]

[-28.25995613 -23.85544268 -4.41858446 -20.21769226]

[-27.77851779 -23.59675098 -3.59362754 -20.09340966]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.00860873 -0.17744392 0.2196625 -0.15628067]

[-0.00608167 0.02685456 0.03015107 0.02503073]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.00860873 -0.17744392 0.2196625 -0.15628067]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0. 0. 0. 0. ]

[ 0.00252705 0.20429848 -0.18951143 0.1813114 ]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.00860873 -0.17744392 0.2196625 -0.15628067]

[-0.00608167 0.02685456 0.03015107 0.02503073]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.3099755 0.71362471 0.23957588 0.95084655]

sumlogdet: torch.Size([4]) torch.float64

[-0. 0. 0. 0.02503073]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[0. 0. 0. 1.]

loss: None None

-0.015584381253787876

plaqs: torch.Size([4]) torch.float64

[0.7403035 0.65171197 0.31634181 0.47457693]

sinQ: torch.Size([4]) torch.float64

[-0.00859781 -0.00165773 -0.00396142 0.00092218]

intQ: torch.Size([4]) torch.float64

[-4.90016318e-04 -9.44793472e-05 -2.25773828e-04 5.25577319e-05]

dQint: torch.Size([4]) torch.float64

[0. 0. 0. 0.00032025]

dQsin: torch.Size([4]) torch.float64

[0. 0. 0. 0.00561914]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 9

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 10

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-31.39076793 -27.31985011 -19.54217484 -21.1104468 ]

[-31.0614939 -27.06503105 -19.07066872 -21.3910664 ]

[-30.84961158 -26.95602155 -18.69349894 -20.00516103]]

logprob: torch.Size([3, 4]) torch.float64

[[-31.39076793 -27.31985011 -19.54217484 -21.1104468 ]

[-31.06645234 -26.87717262 -19.24520362 -21.21648217]

[-30.82929486 -26.99061806 -18.65210819 -20.00045772]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.00495844 -0.18785843 0.1745349 -0.17458422]

[-0.02031672 0.03459652 -0.04139075 -0.00470331]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.00495844 -0.18785843 0.1745349 -0.17458422]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0. 0. 0. 0. ]

[-0.02527516 0.22245494 -0.21592565 0.16988091]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.00495844 -0.18785843 0.1745349 -0.17458422]

[-0.02031672 0.03459652 -0.04139075 -0.00470331]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.57036826 0.71947605 0.41062839 0.32956256]

sumlogdet: torch.Size([4]) torch.float64

[-0.02031672 0.03459652 -0.04139075 -0. ]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[1. 1. 1. 0.]

loss: None None

-0.011982253415392767

plaqs: torch.Size([4]) torch.float64

[0.7403035 0.65171197 0.45503328 0.62236101]

sinQ: torch.Size([4]) torch.float64

[-0.00859781 -0.00165773 -0.00088107 0.01924983]

intQ: torch.Size([4]) torch.float64

[-4.90016318e-04 -9.44793472e-05 -5.02151834e-05 1.09710862e-03]

dQint: torch.Size([4]) torch.float64

[0.00043531 0.00061571 0.00043569 0. ]

dQsin: torch.Size([4]) torch.float64

[0.00763793 0.01080326 0.00764459 0. ]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 11

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 12

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-31.42781825 -21.38032064 -19.16557544 -24.26085164]

[-30.89428362 -21.22321069 -18.90945902 -23.55378881]

[-30.46921912 -20.86892045 -18.93287679 -23.36450964]]

logprob: torch.Size([3, 4]) torch.float64

[[-31.42781825 -21.38032064 -19.16557544 -24.26085164]

[-30.88117485 -21.00988585 -19.11822465 -23.54366138]

[-30.55010401 -20.84704698 -19.01058406 -23.31072478]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.01310877 -0.21332485 0.20876563 -0.01012743]

[ 0.08088489 -0.02187348 0.07770727 -0.05378486]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.01310877 -0.21332485 0.20876563 -0.01012743]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0. 0. 0. 0. ]

[ 0.09399365 0.19145137 -0.13105835 -0.04365743]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.01310877 -0.21332485 0.20876563 -0.01012743]

[ 0.08088489 -0.02187348 0.07770727 -0.05378486]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.41573209 0.58668123 0.85642256 0.38669196]

sumlogdet: torch.Size([4]) torch.float64

[ 0.08088489 -0. 0.07770727 -0. ]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[1. 0. 1. 0.]

loss: None None

-0.016004372395409854

plaqs: torch.Size([4]) torch.float64

[0.84037172 0.65154716 0.42313337 0.73863172]

sinQ: torch.Size([4]) torch.float64

[ 1.47285038e-03 -1.56397921e-03 -6.09823914e-03 -6.72724198e-05]

intQ: torch.Size([4]) torch.float64

[ 8.39424059e-05 -8.91361261e-05 -3.47557954e-04 -3.83406818e-06]

dQint: torch.Size([4]) torch.float64

[1.23274406e-05 0.00000000e+00 5.10271910e-04 0.00000000e+00]

dQsin: torch.Size([4]) torch.float64

[0.0002163 0. 0.00895321 0. ]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 13

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 14

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-29.59240183 -21.94551736 -28.47707495 -20.59708329]

[-29.48303373 -21.10556806 -28.05877271 -20.58985545]

[-29.39854899 -20.73632654 -27.22089443 -19.77844503]]

logprob: torch.Size([3, 4]) torch.float64

[[-29.59240183 -21.94551736 -28.47707495 -20.59708329]

[-29.40940421 -20.98154179 -28.17860182 -20.541757 ]

[-29.31460821 -20.72420952 -27.17276265 -19.77710774]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.07362952 -0.12402627 0.11982911 -0.04809845]

[-0.08394078 -0.01211702 -0.04813178 -0.00133729]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.07362952 -0.12402627 0.11982911 -0.04809845]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0. 0. 0. 0. ]

[-0.01031126 0.11190925 -0.16796089 0.04676117]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.07362952 -0.12402627 0.11982911 -0.04809845]

[-0.08394078 -0.01211702 -0.04813178 -0.00133729]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.75745313 0.2948443 0.27135909 0.44044242]

sumlogdet: torch.Size([4]) torch.float64

[-0.08394078 -0.01211702 -0.04813178 -0.00133729]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[1. 1. 1. 1.]

loss: None None

-0.012984717158635528

plaqs: torch.Size([4]) torch.float64

[0.75491793 0.6547223 0.63304782 0.73863172]

sinQ: torch.Size([4]) torch.float64

[ 3.85535809e-03 -1.41124889e-02 -1.46423245e-02 -6.72724198e-05]

intQ: torch.Size([4]) torch.float64

[ 2.19729063e-04 -8.04315419e-04 -8.34512428e-04 -3.83406817e-06]

dQint: torch.Size([4]) torch.float64

[0.00065824 0.00058615 0.00017334 0.00014198]

dQsin: torch.Size([4]) torch.float64

[0.01154945 0.01028462 0.00304149 0.00249122]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 15

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 16

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-27.90336074 -24.58447835 -27.70507628 -25.1095963 ]

[-27.4557147 -23.38939397 -26.86035304 -24.74188789]

[-26.76730611 -23.12311187 -26.13507215 -24.81611966]]

logprob: torch.Size([3, 4]) torch.float64

[[-27.90336074 -24.58447835 -27.70507628 -25.1095963 ]

[-27.42721443 -23.25741435 -27.03208626 -24.69073936]

[-26.83629231 -23.14150881 -26.14979085 -24.84522864]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.02850027 -0.13197962 0.17173322 -0.05114852]

[ 0.0689862 0.01839694 0.0147187 0.02910898]]

sldf: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.02850027 -0.13197962 0.17173322 -0.05114852]

[ 0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0. 0. 0. 0. ]

[ 0.09748647 0.15037655 -0.15701452 0.0802575 ]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[-0.02850027 -0.13197962 0.17173322 -0.05114852]

[ 0.0689862 0.01839694 0.0147187 0.02910898]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.34401554 0.23622524 0.21112911 0.76769124]

sumlogdet: torch.Size([4]) torch.float64

[0.0689862 0. 0.0147187 0.02910898]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[1. 0. 1. 1.]

loss: None None

-0.011869373548195221

plaqs: torch.Size([4]) torch.float64

[0.82255532 0.72111137 0.69612456 0.72896623]

sinQ: torch.Size([4]) torch.float64

[-0.00564947 -0.00444414 -0.01693866 0.00255894]

intQ: torch.Size([4]) torch.float64

[-0.00032198 -0.00025329 -0.00096539 0.00014584]

dQint: torch.Size([4]) torch.float64

[8.49293564e-05 0.00000000e+00 3.84992539e-04 3.15784764e-04]

dQsin: torch.Size([4]) torch.float64

[0.00149017 0. 0.00675506 0.00554075]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 17

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 18

[10/04/23 08:07:06][INFO][common.py:97] - energy: torch.Size([3, 4]) torch.float64

[[-28.74207541 -24.8965513 -32.81235279 -22.59228204]

[-27.98828099 -23.91465789 -32.28912627 -22.61626646]

[-27.23785728 -24.01606216 -31.51716178 -21.73978358]]

logprob: torch.Size([3, 4]) torch.float64

[[-28.74207541 -24.8965513 -32.81235279 -22.59228204]

[-28.07501629 -23.97865766 -32.35996206 -22.61968433]

[-27.18635944 -23.93497767 -31.62224319 -21.75485085]]

logdet: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.0867353 0.06399977 0.07083579 0.00341787]

[-0.05149783 -0.08108449 0.10508142 0.01506727]]

sldf: torch.Size([3, 4]) torch.float64

[[0. 0. 0. 0. ]

[0.0867353 0.06399977 0.07083579 0.00341787]

[0. 0. 0. 0. ]]

sldb: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0. 0. 0. 0. ]

[-0.13823313 -0.14508427 0.03424563 0.0116494 ]]

sld: torch.Size([3, 4]) torch.float64

[[ 0. 0. 0. 0. ]

[ 0.0867353 0.06399977 0.07083579 0.00341787]

[-0.05149783 -0.08108449 0.10508142 0.01506727]]

xeps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

veps: torch.Size([3]) torch.float64

[0.1 0.1 0.1]

acc: torch.Size([4]) torch.float64

[0.21103823 0.38229083 0.30418792 0.43282093]

sumlogdet: torch.Size([4]) torch.float64

[-0. -0.08108449 0. 0.01506727]

beta: torch.Size([]) torch.float64

6.0

acc_mask: torch.Size([4]) torch.float32

[0. 1. 0. 1.]

loss: None None

-0.00985098143962743

plaqs: torch.Size([4]) torch.float64

[0.85381281 0.70579842 0.80681173 0.75974167]

sinQ: torch.Size([4]) torch.float64

[-0.00332841 -0.00489329 -0.01271997 0.00506889]

intQ: torch.Size([4]) torch.float64

[-0.0001897 -0.00027888 -0.00072495 0.00028889]

dQint: torch.Size([4]) torch.float64

[0. 0.00045467 0. 0.00140838]

dQsin: torch.Size([4]) torch.float64

[0. 0.00797764 0. 0.02471136]

[10/04/23 08:07:06][INFO][2642635469.py:4] - TRAIN STEP: 19
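The printed metrics satisfy a few identities that are easy to check by hand. The sketch below uses the values from the TRAIN STEP 2 printout and verifies three things. First, `logprob` equals `energy - logdet` element-wise. Second, each row of `logdet` (which prints identically to `sld`) is the running sum of the `sldf` and `sldb` rows. Third, `sumlogdet` equals the last row of `logdet` multiplied by `acc_mask`. These relations are inferred from the printed numbers alone, not taken from the library's source. The tolerance `TOL = 1e-7` is an assumption chosen to absorb the 8-decimal rounding of the printout.

```python
import math

# Values copied from the TRAIN STEP 2 printout (3 rows x 4 chains).
energy = [
    [-20.11355156, -13.83759796, -4.51987377, -11.45297864],
    [-19.63407248, -12.82094906, -3.89140218, -11.38944608],
    [-18.91054695, -12.57901193, -3.60837652, -10.80563673],
]
logprob = [
    [-20.11355156, -13.83759796, -4.51987377, -11.45297864],
    [-19.5349711, -12.66848527, -3.6937792, -11.31223158],
    [-18.98136385, -12.5501776, -3.58081924, -10.80513544],
]
logdet = [  # `sld` prints identically
    [0.0, 0.0, 0.0, 0.0],
    [-0.09910138, -0.15246379, -0.19762298, -0.0772145],
    [0.07081689, -0.02883433, -0.02755728, -0.00050128],
]
sldf = [
    [0.0, 0.0, 0.0, 0.0],
    [-0.09910138, -0.15246379, -0.19762298, -0.0772145],
    [0.0, 0.0, 0.0, 0.0],
]
sldb = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.16991827, 0.12362946, 0.17006571, 0.07671321],
]
acc_mask = [1.0, 0.0, 1.0, 1.0]
sumlogdet = [0.07081689, -0.0, -0.02755728, -0.00050128]

TOL = 1e-7  # assumed tolerance for the rounding in the printout


def close(a, b, tol=TOL):
    return math.isclose(a, b, rel_tol=0.0, abs_tol=tol)


def check_logprob():
    # (i) logprob == energy - logdet, element-wise
    return all(
        close(e - d, p)
        for erow, drow, prow in zip(energy, logdet, logprob)
        for e, d, p in zip(erow, drow, prow)
    )


def check_running_sum():
    # (ii) logdet row k == sum over rows j <= k of (sldf[j] + sldb[j])
    running = [0.0] * len(logdet[0])
    for frow, brow, drow in zip(sldf, sldb, logdet):
        running = [r + f + b for r, f, b in zip(running, frow, brow)]
        if not all(close(r, d) for r, d in zip(running, drow)):
            return False
    return True


def check_sumlogdet():
    # (iii) sumlogdet == last row of logdet, zeroed where acc_mask is 0
    return all(
        close(d * m, s) for d, m, s in zip(logdet[-1], acc_mask, sumlogdet)
    )


print(check_logprob(), check_running_sum(), check_sumlogdet())  # True True True
```

The same checks can be repeated on any of the later printouts by pasting in that step's rows.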